The use of artificial intelligence (AI) tools such as Chat-GPT by legal professionals in dispute resolution is already a reality, and it will bring several changes to the way dispute resolution is conceived and structured. There are, however, legal and ethical limits to this use. Its main technical and ethical issues, as well as its principal risks, were examined in a webinar held on 16 May 2023, the first of a series of events in Spanish and Portuguese promoted by ArbTech[1].

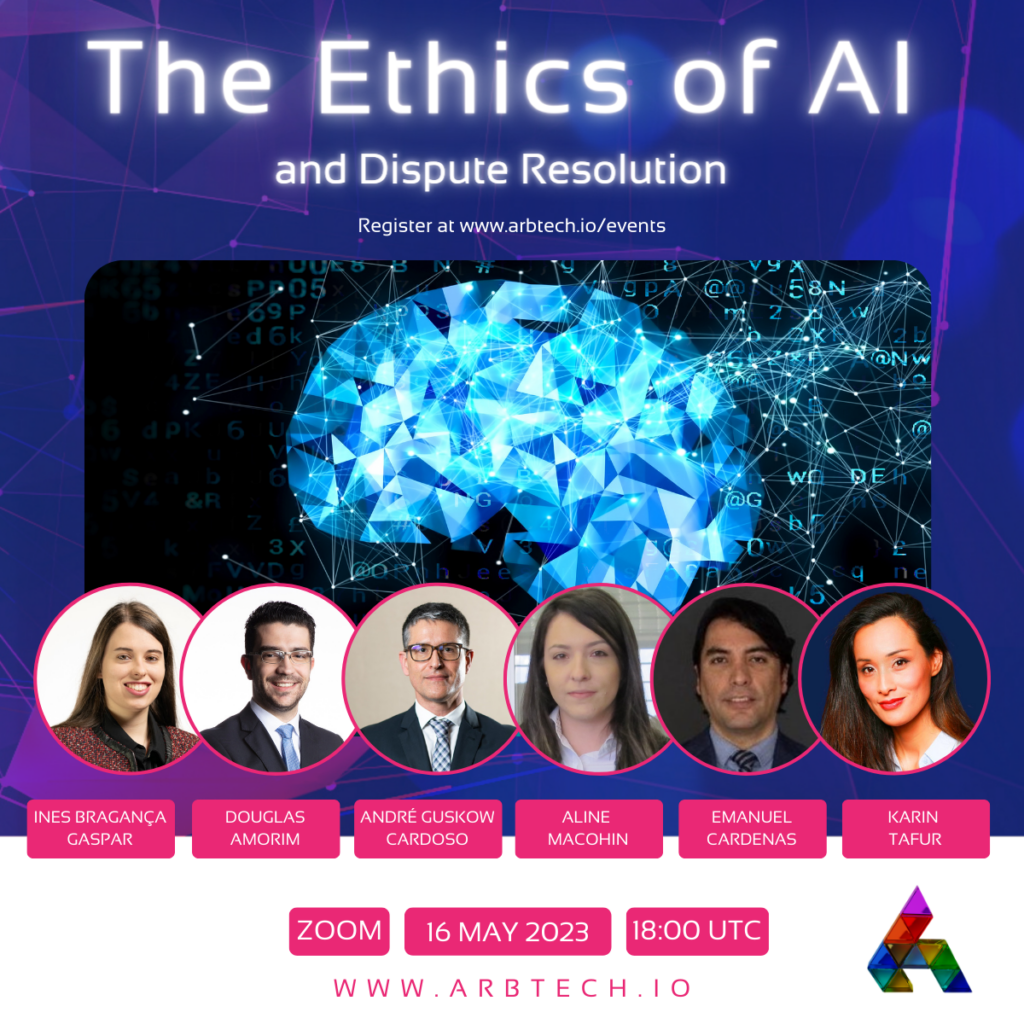

In the webinar, a panel of experts with both technical and legal backgrounds addressed these issues. The panel consisted of Aline Macohin, a Brazilian attorney with expertise in both legal and technical fields; Karin Tafur, a legal researcher and AI consultant; and Douglas Amorim de Oliveira, a Brazilian computer scientist who took part in an experiment promoted by the CIArb Brazil Branch[2] in which the large language model (LLM) Chat-GPT competed against a human team in a moot competition.

The panel first examined the key ethical and legal issues that legal professionals engaged in dispute resolution should consider. Karin Tafur explored the current regulatory and governance landscape and highlighted a series of risks associated with the use of AI in general. She emphasised the risks of injustice arising from bias, security problems, and political and social instability, noting that AI models can exacerbate inequalities and discriminatory practices.

According to Karin, these tools face constraints such as a lack of innovation and creativity, heavy dependence on the quality and quantity of their training data, and intensive resource usage, which hampers their scalability. She also criticised the use of the term ‘hallucination’ for generative AI tools, arguing that hallucination is intrinsically linked to human experience. Nevertheless, AI models do produce false information, which makes explainability important both to counter it and to understand how particular results are reached.

Addressing bias in AI models, Karin emphasised the need to adopt appropriate cultural practices and to conduct audits. She stressed that diversity matters both in the training data and in the teams that build and run AI systems. Auditing mechanisms should be used to mitigate risks both before and after an AI model is deployed.

Regarding the risk of (lack of) consent, it is crucial to ensure that the data used for an AI model is representative, obtained with proper consent, and collected without violating copyright or other rights. It is also essential to verify that the models being used respect people’s privacy. Auditing and accountability processes help mitigate this risk, and AI governance processes should ensure compliance with current legislation and standards and provide mechanisms through which individuals can learn about, and express their position on, consent-related issues.

In terms of security, the versatility of LLMs like Chat-GPT also makes them a target. Attackers may carry out so-called ‘indirect prompt injections’, in which instructions hidden in content the model processes (such as a web page or document) are used to alter the behaviour of the AI. To mitigate these risks, user education is crucial, as people need to be trained in the responsible management of AI.

Furthermore, the environmental impact of training new language models, including their consumption of natural resources, must be considered: the carbon emissions produced during training are significant.

As legal professionals, we must also weigh the costs of these systems for society and individuals, including the negative externalities associated with them.

Douglas Amorim discussed the technical aspects of LLMs, providing a high-level overview of the attention mechanism and distinguishing it from earlier text generation approaches in AI. LLMs are large-scale models trained on massive volumes of data using deep learning techniques. They can be employed for text generation, summarisation, and text correction.

Attention is a mathematical mechanism at the core of the Transformer architecture, introduced by Google researchers in 2017, on which current LLMs are built. Unlike older models, which focused on individual words and their relationship with the words that follow, attention-based models represent words as numerical feature vectors and compute how strongly each word relates to every other word in a sentence or text, which allows semantic relationships across the whole passage to be captured. Combined with training on very large datasets, this technique is highly effective at generating sentences that are semantically consistent with the preceding text.
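To make the idea of attention more concrete, the following is a minimal sketch of scaled dot-product attention, the form used in the Transformer architecture, written in plain Python. It is illustrative only: the two-dimensional word vectors are invented for this example, and, for brevity, the same vectors serve as queries, keys and values, whereas real models learn separate projections and use vectors with hundreds or thousands of dimensions.

```python
import math

def softmax(scores):
    """Turn raw scores into positive weights that sum to 1."""
    m = max(scores)  # subtract the maximum for numerical stability
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def attention(queries, keys, values):
    """Scaled dot-product attention over lists of vectors.

    Each query is scored against every key, the scores are scaled by
    sqrt(dimension) and normalised with softmax, and the output is the
    weighted average of the value vectors.
    """
    d = len(keys[0])
    outputs, all_weights = [], []
    for q in queries:
        scores = [dot(q, k) / math.sqrt(d) for k in keys]
        weights = softmax(scores)
        out = [sum(w * v[i] for w, v in zip(weights, values))
               for i in range(len(values[0]))]
        outputs.append(out)
        all_weights.append(weights)
    return outputs, all_weights

# Hypothetical toy embeddings: "arbitral" and "tribunal" point in similar
# directions, "coffee" points elsewhere.
embeddings = {
    "arbitral": [1.0, 0.0],
    "tribunal": [0.9, 0.1],
    "coffee": [0.0, 1.0],
}
tokens = ["arbitral", "tribunal", "coffee"]
vecs = [embeddings[t] for t in tokens]

# Self-attention: every word attends to every word in the same sentence.
out, w = attention(vecs, vecs, vecs)
for token, row in zip(tokens, w):
    print(token, [round(x, 2) for x in row])
```

In this toy setup, ‘tribunal’ places more weight on ‘arbitral’ than on ‘coffee’ because their vectors are similar; this weighting across all words of a passage is what allows the model to relate words that are far apart.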

However, there are technical barriers that prevent legal professionals and arbitrators from fully utilising LLM tools for dispute resolution.

In the case of Chat-GPT, the first technical limitation lies in the dataset on which the model was trained. A substantial amount of data is necessary for a tool to be accurate and functional in a specific context. Even when Chat-GPT is fine-tuned for a particular topic or sector, it retains biases and ‘long-term memory’ from its original training data. Conversely, a highly specialised dataset may not be large enough to produce high-quality results.

In the context of dispute resolution, two significant limitations arise. The first is the computational cost of processing a sufficiently large dataset and providing it with specific context. The second is the possibility that current LLMs may deviate from the principles applicable to legal practice: legal work calls for a focused approach, whereas existing tools are general-purpose.

Hallucinations and similar issues are another challenge. LLMs are primarily designed for text generation, and while the texts they generate may be syntactically correct, their content may not correspond to reality. Hallucinations refer to the models’ tendency to generate sentences and texts that sound confident and are grammatically correct but convey untrue or fictional content.

According to Aline Macohin, generative AI tools can be highly useful for legal professionals. However, their use must be carefully considered and defined within specific contexts, such as law firms, arbitral tribunals, courts, and public institutions. It is also crucial to be able to determine whether an AI tool has actually been used.

Other tools, such as text mining, raise ethical questions to varying degrees. Searching for and compiling statistics on precedents and legal texts tends to be less controversial. However, when a tool is used for decision-making and has the potential to harm someone, various ethical questions arise. Each profession, including the legal profession, has its own code of ethics to follow, alongside technical standards and the laws of different countries.

One of the main challenges of generative AI tools is their lack of transparency. Without knowing how they were trained and on what data, it is difficult to ascertain whether the text they generate complies with legislation and ethical requirements.

Various countries and international entities have proposed principles for AI that emphasise transparency. Transparency is not only a requirement in itself but also a prerequisite for achieving the other proposed principles, such as ensuring that AI systems are responsible and reliable. It also instils confidence in the results provided by AI solutions.

Transparency is essential for all systems that affect citizens or restrict their rights. The IEEE 7001 technical standard[3] defines different levels of transparency, which should be adapted to each recipient. High-risk systems require greater transparency.

Transparency is also required within the development process. Developers of deep learning AI systems must avoid creating black boxes, and technical teams should work towards transparency and explainability of the system, even if only partial. This allows for more effective testing, risk identification, and the mapping of blind spots. Once risks are mapped, controls and mechanisms can be established to reduce or mitigate negative impacts.

Different recipients require tailored explanations. Individuals affected by a decision made using AI mechanisms and tools, particularly in dispute resolution, want to understand how that decision was reached. Supervisory bodies also require specific transparency to effectively oversee AI systems.

In dispute resolution systems, it is crucial to establish a minimum level of transparency, map risks, and find solutions for potential negative effects. Ethical considerations, such as gender and race, must be taken into account, and transparency is necessary to address them.

Current regulations and debates about the limitations of AI tools were also discussed. Generative models are subject to various regulations, and in Europe discussions are ongoing regarding the regulatory framework for AI. Organisations and professional entities using these AI tools in Europe must pay attention to forthcoming regulations, especially the final text of the EU Artificial Intelligence Act, which is expected to be adopted by the end of 2023, as their impact extends beyond European borders owing to the ‘Brussels effect’.

The discussions during the webinar demonstrated the considerable usefulness of AI tools, both in general activities and in dispute resolution. However, caution must be exercised, particularly regarding transparency, control over and awareness of datasets, and the risks of bias and prejudice. These risks should be addressed through measures that mitigate them or circumvent their effects.

A prime example of such risk occurred days after the webinar, when a lawyer was reprimanded by a US court because a brief he filed contained hallucinated content. As widely reported in the media, the use of Chat-GPT in that case resulted in the citation of non-existent precedents[4].

This confirms the need for these issues to be discussed extensively and effectively by the legal community and by professionals engaged in dispute resolution and arbitration. It may ultimately be concluded that tools based on language models are not yet mature enough for such use. Such maturity will likely be achieved only through their conscious use, with awareness of their risks and potential negative effects, and through comprehensive and frequent discussion of their application in legal proceedings.


[1] ArbTech. Available at https://www.arbtech.io/

[2] CIArb – Human vs. Machine. Available at https://youtu.be/vnI4bHOkgFM

[3] IEEE 7001-2021, Standard for Transparency of Autonomous Systems. Available at https://standards.ieee.org/ieee/7001/6929/

[4] The New York Times, 27 May 2023. Available at https://www.nytimes.com/2023/05/27/nyregion/avianca-airline-lawsuit-chatgpt.html